Understanding A/B Testing Sample Size For Confidence and Profit

So you’ve spoken to your users and from their feedback you’ve come up with a new idea to increase your new customer conversion rate. Perhaps it’s a stronger call to action on the final checkout button or a simpler pricing page.

You get the new idea designed and then implement it with an A/B testing tool. You take it live and after a few days, the new version is winning.

Success!

Or is it?

You wouldn’t declare victory after just a handful of people had used each variation. So why consider a few days’ worth of data enough?

Sample size is a frequently misunderstood concept. Many people intuitively realize that a test needs some minimum sample size to be meaningful. However, when deciding how long to run a test, even seasoned SaaS and e-commerce professionals often go with their gut or just pick numbers that “feel” reasonable.

The consequences of going with your gut

When a test isn’t given enough sample size, there are two possible consequences:

1.) An underperforming option is chosen as the winner. During the initial stages of many A/B tests, the lead can change back and forth between variations, sometimes frequently.

If you stop your test too early, you can easily be fooled by short-term randomness. This can lead you to pick the apparent “winner,” which in the long term may be no better than the control, or even worse.

You can end up missing out on a lot of sales, and who knows how long it’ll take you to realize your mistake.

2.) You abandon a variation that actually would have been better. In the early stages of an A/B test, sometimes the better variation appears worse for a number of days, sometimes drastically worse.

If you stop the test too soon, you might throw away a big improvement in conversion rate just because you didn’t have the patience to wait for the appropriate sample size.

In both situations, a potentially large amount of revenue is lost simply because enough sample size wasn’t given to the test.
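
Here’s a small Python simulation sketch of that first failure mode, using only the standard library. It runs hypothetical A/A tests in which both variations share the same true 5% conversion rate, so any “winner” is pure noise, and compares two habits: checking a two-proportion z-test every day and stopping at the first significant result, versus checking once at the planned end. The traffic numbers, the 20-day length, and the choice of test are illustrative assumptions, not any particular tool’s method.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test (normal approximation)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions yet (or all converted): nothing to compare
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(trials=500, days=20, visitors_per_day=100,
                      true_rate=0.05, alpha=0.05, seed=42):
    """Share of A/A tests that falsely show a winner when peeking daily vs. at the end."""
    rng = random.Random(seed)
    peeking_winners = 0  # a daily checker would have stopped with a "winner"
    final_winners = 0    # significant when checked once, at the planned end
    for _ in range(trials):
        conv_a = conv_b = visitors = 0
        declared = False
        for _ in range(days):
            # Both variations convert at the same true rate.
            conv_a += sum(rng.random() < true_rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < true_rate for _ in range(visitors_per_day))
            visitors += visitors_per_day
            if p_value(conv_a, visitors, conv_b, visitors) < alpha:
                declared = True  # once declared, the peeker has already stopped
        peeking_winners += declared
        final_winners += p_value(conv_a, visitors, conv_b, visitors) < alpha
    return peeking_winners / trials, final_winners / trials


peek_rate, fixed_rate = simulate_aa_tests()
print(f"False winners when checking daily: {peek_rate:.0%}")
print(f"False winners when checking once at the end: {fixed_rate:.0%}")
```

With daily checks, the share of tests that falsely declare a winner typically lands several times higher than the roughly 5% you get by waiting for the planned end.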

So how much sample size do I need?

The first step in giving a test enough sample is deciding how much it needs in the first place. Unfortunately (for some of my readers), we’re going to need some math to figure this out.

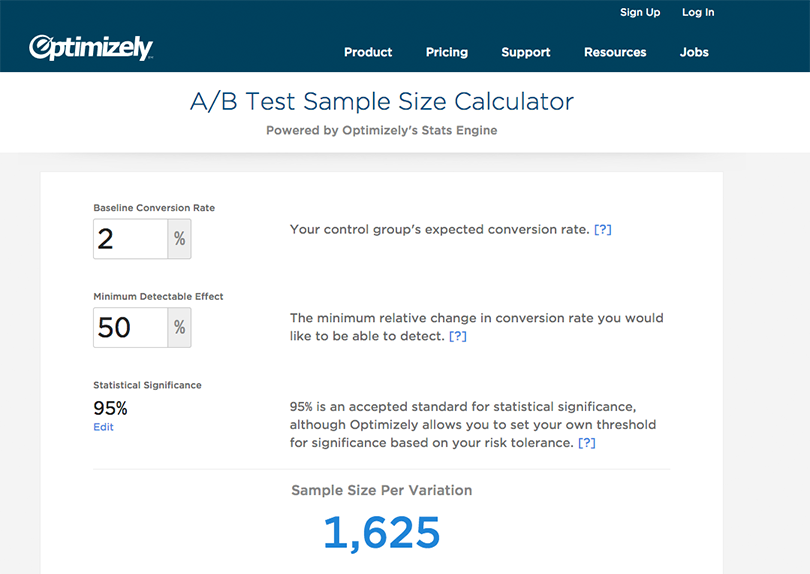

Luckily, the nice folks at Optimizely have done the math for us and created a free, easy-to-understand sample size calculator.

There are plenty of others but Optimizely’s is my favorite.
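
If you’re curious what a calculator like this does under the hood, here’s a minimal Python sketch of the classic fixed-horizon formula for comparing two conversion rates. It assumes a two-sided two-proportion z-test and 80% statistical power, a common default that isn’t one of the inputs described below. Optimizely’s calculator may use a different statistical method, so don’t expect its numbers to match exactly. The function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline, relative_mde, significance=0.95, power=0.80):
    """Approximate visitors needed per variation for a fixed-horizon,
    two-sided two-proportion z-test (normal approximation).

    baseline: control conversion rate, e.g. 0.05 for 5%
    relative_mde: smallest relative change to detect, e.g. 0.10 for 10%
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    alpha = 1 - significance
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the chosen power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical inputs: 5% baseline, 10% relative MDE, 95% significance
print(sample_size_per_variation(0.05, 0.10))
```

Lowering the MDE or raising the significance level both push the number up, which is the trade-off the inputs below control.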

Before diving in, you’ll need to understand a few of the calculator’s inputs so you can use it to accurately determine how much sample size your A/B test needs.

Baseline Conversion Rate

This is the current conversion rate for your control group. So if your test is geared towards improving how many people complete your final credit card details form, this number is the current conversion rate for the form.

Minimum Detectable Effect

This number is the size of the improvement you want to be able to “detect.” For example, if you want to know whether your new credit card form is at least 10% better (or worse) than the control variation, set this number to 10.
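
Here’s the arithmetic behind these two inputs as a small Python sketch. It assumes the MDE is relative to the baseline (which is how “10% better” reads above), so a 10% MDE on a 4% baseline means detecting a move to 4.4%, not to 14%. The helper names and visitor counts are hypothetical.

```python
def baseline_rate(conversions, visitors):
    """Control conversion rate: completions divided by visitors who reached the step."""
    return conversions / visitors


def target_rate(baseline, relative_mde_percent):
    """Rate a variation must reach to differ from the baseline by the (relative) MDE."""
    return baseline * (1 + relative_mde_percent / 100)


# Hypothetical: 120 of 3,000 visitors completed the credit card form
base = baseline_rate(120, 3000)
print(f"{base:.1%} -> {target_rate(base, 10):.1%}")  # 4.0% -> 4.4%
```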

One important consequence of this input: the smaller the change you want to detect, the larger the sample you’ll need. A 50% detectable difference requires far less sample size than a 10% difference.
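
To see why, here’s the common normal-approximation formula for the sample size per variation of a fixed-horizon, two-sided test comparing two conversion rates (an assumption; calculators may use other methods). Here $p$ is the baseline rate, $\delta$ the relative MDE (0.10 for 10%), $\alpha = 1 - \text{significance}$, and $1 - \beta$ the statistical power:

$$
n \approx \frac{\left(z_{1-\alpha/2} + z_{1-\beta}\right)^2 \left[\,p(1-p) + p(1+\delta)\bigl(1 - p(1+\delta)\bigr)\right]}{(p\,\delta)^2}
$$

Because $\delta$ is squared in the denominator, shrinking the MDE from 50% to 10% multiplies the required sample by about $(0.5/0.1)^2 = 25$. At a 5% baseline, the variance term in the brackets also shrinks a little, so the increase works out to roughly 21 times.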

If you’re on day 14 of a test and one variation is winning by 25%, you’ll input 25 into this form field to find out if you’ve reached enough sample size.

Statistical Significance

This number denotes how sure you want to be that a difference the test detects isn’t just random chance. At 95% significance, if the two variations actually perform the same, there’s only about a 1-in-20 chance the test will show a false winner. It takes a larger sample size to be 99% sure than to be 95% sure, and 95% is a good default to start with.
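
To make that definition concrete, here’s a minimal Python sketch of one common way to check significance, a pooled two-proportion z-test (an assumption; Optimizely and other tools may compute significance differently). At 95% significance, a result counts as a winner only when its p-value is below 0.05; at 99%, it must be below 0.01. The function names and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ab_test_p_value(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def is_significant(p_value, significance=0.95):
    """Call a winner only if p is below 1 - significance (0.05 at 95%)."""
    return p_value < 1 - significance


# Hypothetical results: control 200/4,000 conversions, variation 250/4,000
p = ab_test_p_value(200, 4000, 250, 4000)
print(round(p, 4), is_significant(p), is_significant(p, 0.99))
```

The same data can clear the 95% bar and still fall short of 99%, which is why a higher significance setting demands more sample.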

Sample Size Per Variation

Once you enter the three inputs explained above, you’ll see the number you’re looking for under “Sample Size Per Variation.” The key words here are “per variation”: if that number is 1,000 and you’re testing a control against one variation, you’ll need 2,000 visits (1,000 for each) to have enough sample size.

Conquering that other barrier to proper sample size (You)

Now that you know how much sample size you need, the last step is to be patient and allow that sample size to accrue.

A/B tests can be emotional, especially if you’re relying on the improvements to hit revenue targets. You’re naturally going to want to read into the results of an early winner or loser and pull the trigger on a variation. It’s a common reaction I’ve seen over and over again.

But as I mentioned before, an itchy trigger finger can have big revenue consequences.

Results pacts

My first run-in with sample size was in my previous career as a professional poker player. All of the poker pros I knew (including me) were obsessed with our win rates, essentially how much money a player made per hand dealt. It was an ego thing. We boasted about our win rates to each other and lamented temporary (or not so temporary) dips. Many of us felt it was our key performance indicator.

But poker is a complicated game with a lot of variance, which causes win rates to fluctuate greatly. That variance is part of what makes poker a profitable game. Losing players can appear to be winners after tens or even hundreds of thousands of hands.

When we obsessed about our win rates, examining them after every session, it drove us batty and wasted a lot of precious mental and emotional energy we could have spent on improving our skills or enjoying life.

To deal with this problem, we made “no-look pacts,” in which a group of us pledged not to look at our results for a set period of time, usually a few weeks.

Whenever I took part in one of these pacts, I felt a tremendous weight lifted from my shoulders. It took a lot of self-control, but it was always worth it. I played better and I enjoyed playing more which allowed me to play more hands at a higher win rate.

This technique also works with A/B testing. I recommend using your current traffic levels to determine how many days it’ll take to reach the sample size your A/B test needs. Once you turn the test on, watch it for a few days to make sure the data is coming in correctly and there aren’t any obvious problems, such as one variation having a 0% conversion rate.

Once you’re sure the test is running properly, put away the analytics until you approach the number of days needed to reach your target sample size.
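
The planning and sanity-check steps above can be sketched in a few lines of Python. Both helper names are hypothetical, the sketch assumes traffic is split evenly and every daily visitor enters the test, and the 200-visitor threshold for flagging a 0% conversion rate is an arbitrary placeholder you’d tune to your own baseline.

```python
from math import ceil


def days_needed(per_variation, num_variations, daily_visitors):
    """Days until every variation, control included, reaches the calculator's
    sample size per variation. Assumes all daily visitors are split evenly."""
    total_visits = per_variation * num_variations
    return ceil(total_visits / daily_visitors)


def suspicious_variations(results, min_visitors=200):
    """Return variations still at a 0% conversion rate after min_visitors visits,
    a likely sign of broken tracking rather than a real result.

    results maps variation name -> (visitors, conversions).
    """
    return [name for name, (visitors, conversions) in results.items()
            if visitors >= min_visitors and conversions == 0]


# Hypothetical: 1,000 visitors per variation, control + one variation, 150 visitors/day
print(days_needed(1000, 2, 150))  # 14

# Hypothetical check-in after the first few days: (visitors, conversions)
print(suspicious_variations({"control": (450, 18), "variation": (430, 0)}))  # ['variation']
```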

This will not only keep you from pulling the trigger too soon on a variation; it will also save you a lot of mental and emotional anguish during the early “swings” of a test.

I hope this blog post has helped you understand the importance of getting a proper sample size for your tests. If you have any questions about sample size or A/B testing, or if you’re interested in improving your company’s testing culture, feel free to drop me a line at lennon@smoothconversion.com.